Tweepository


Latest revision as of 12:22, 18 December 2015

The Tweepository plugin enables the repository to harvest a stream of tweets from a Twitter search. This document applies to Tweepository 0.3.1.


Installation Prerequisites

The following Perl libraries must be installed on the server before the Bazaar package will function.

Archive::Zip Archive::Zip::MemberRead Data::Dumper Date::Calc Date::Parse Encode File::Copy File::Path HTML::Entities JSON LWP::UserAgent Number::Bytes::Human Storable URI URI::Find
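Before installing from the Bazaar, you can check which of these modules are already available. This is a minimal sketch, assuming the `perl` on the PATH is the interpreter EPrints uses; `check_modules` is a hypothetical helper, not part of Tweepository:

```shell
#!/bin/sh
# Hypothetical helper: report which Perl modules cannot be loaded.
# Prints "missing: <Module>" for each failure, then a summary line.
# Returns 0 if every module loads, 1 otherwise.
check_modules() {
    missing=0
    for mod in "$@"; do
        # perl -MModule -e 1 exits non-zero if the module cannot be loaded
        if ! perl -M"$mod" -e 1 >/dev/null 2>&1; then
            echo "missing: $mod"
            missing=$((missing + 1))
        fi
    done
    echo "$missing module(s) missing"
    [ "$missing" -eq 0 ]
}

# Module list copied from the prerequisites above.
check_modules Archive::Zip Archive::Zip::MemberRead Data::Dumper Date::Calc \
    Date::Parse Encode File::Copy File::Path HTML::Entities JSON \
    LWP::UserAgent Number::Bytes::Human Storable URI URI::Find ||
    echo "Install the missing modules before using Tweepository."
```

Run it as the user that runs EPrints, so that the same Perl library path is checked.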

Installation

Install through the EPrints Bazaar

Add Twitter API Keys

Create a file in your repository's cfg.d directory to hold your Twitter API keys (e.g. called z_tweepository_oauth.pl). Its content should look like this:

$c->{twitter_oauth}->{consumer_key} = 'xxxxxxxxxxxx';

$c->{twitter_oauth}->{consumer_secret} = 'xxxxxxxxxxxxxxxxxxxxxxxx';

$c->{twitter_oauth}->{access_token} = 'xxxxxxxxxxxx-xxxxxxxxxxxxxxxxxxxxxxxx';

$c->{twitter_oauth}->{access_token_secret} = 'xxxxxxxxxxxxxxxxxxxxxxxx';
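If you would rather generate this file than edit it by hand, here is a minimal sketch. `write_twitter_oauth_cfg` is a hypothetical helper: it writes exactly the four settings shown above and rejects values containing quotes or backslashes, because the values are placed inside single-quoted Perl strings.

```shell
#!/bin/sh
# Hypothetical helper: write the Twitter OAuth settings to a cfg.d file.
# Usage: write_twitter_oauth_cfg FILE CONSUMER_KEY CONSUMER_SECRET ACCESS_TOKEN ACCESS_TOKEN_SECRET
write_twitter_oauth_cfg() {
    [ "$#" -eq 5 ] || { echo "usage: write_twitter_oauth_cfg FILE KEY SECRET TOKEN TOKEN_SECRET" >&2; return 1; }
    file=$1
    shift
    # Values end up inside '...' Perl strings, so refuse quotes and backslashes
    for v in "$@"; do
        case $v in
            *\'*|*\\*) echo "value contains a quote or backslash: $v" >&2; return 1 ;;
        esac
    done
    # \$c stays a literal $c in the output; $1..$4 are the four key values
    cat > "$file" <<EOF
\$c->{twitter_oauth}->{consumer_key} = '$1';
\$c->{twitter_oauth}->{consumer_secret} = '$2';
\$c->{twitter_oauth}->{access_token} = '$3';
\$c->{twitter_oauth}->{access_token_secret} = '$4';
EOF
}
```

For example: `write_twitter_oauth_cfg /opt/eprints3/archives/REPOSITORYID/cfg/cfg.d/z_tweepository_oauth.pl "$KEY" "$SECRET" "$TOKEN" "$TOKEN_SECRET"`, where the four variables hold your own keys.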

Setting Up Cron Jobs

There are three processes that need to be run regularly. Because these tasks are heavyweight, they should be put into the crontab rather than being handled by the EPrints indexer. However, they have been created as event plugins for future inclusion in the indexer, and scripts have been created to wrap the plugins.

Running these scripts will result in log files and a cache being created in the repository's 'var' directory. These should be checked if there are any issues with harvesting.

Add the following to your EPrints crontab (assuming EPrints is installed in '/opt/eprints3'):

0,30 * * * * perl -I/opt/eprints3/perl_lib /opt/eprints3/archives/REPOSITORYID/bin/update_tweetstreams.pl REPOSITORYID

15 0 * * * perl -I/opt/eprints3/perl_lib /opt/eprints3/archives/REPOSITORYID/bin/update_tweetstream_abstracts.pl REPOSITORYID

45 * * * * perl -I/opt/eprints3/perl_lib /opt/eprints3/archives/REPOSITORYID/bin/export_tweetstream_packages.pl REPOSITORYID
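To avoid typos when substituting your own EPrints root and repository ID, the three entries above can be printed by a small function. This is a sketch; `tweepository_crontab` is a hypothetical helper that only reproduces the schedule and paths given above:

```shell
#!/bin/sh
# Hypothetical helper: print the three Tweepository cron entries for one repository.
# Usage: tweepository_crontab EPRINTS_ROOT REPOSITORYID
tweepository_crontab() {
    root=$1
    repo=$2
    bin="$root/archives/$repo/bin"
    # update_tweetstreams.pl: on the hour and at half past
    echo "0,30 * * * * perl -I$root/perl_lib $bin/update_tweetstreams.pl $repo"
    # update_tweetstream_abstracts.pl: daily at 00:15
    echo "15 0 * * * perl -I$root/perl_lib $bin/update_tweetstream_abstracts.pl $repo"
    # export_tweetstream_packages.pl: 45 minutes past every hour
    echo "45 * * * * perl -I$root/perl_lib $bin/export_tweetstream_packages.pl $repo"
}
```

As the EPrints user, the entries can be appended to the existing crontab with `( crontab -l 2>/dev/null; tweepository_crontab /opt/eprints3 REPOSITORYID ) | crontab -`.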

Using

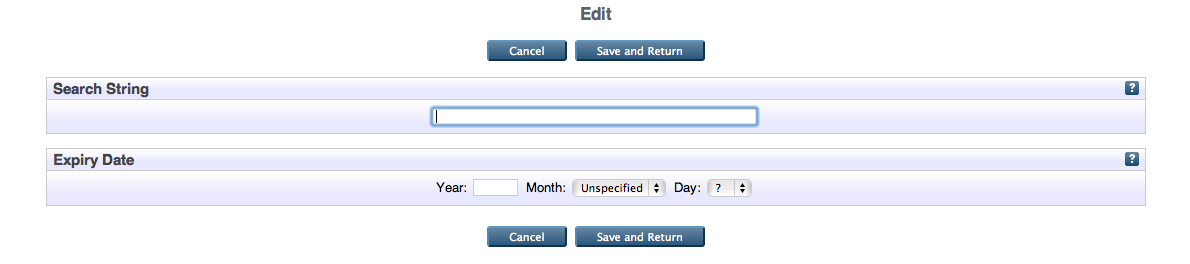

To create a new tweetstream, click on 'Manage Records', then on 'Twitter Feed', and then on the 'Create new Item' button. A new tweetstream object will be created, and you will need to enter two parameters:

- Search String: Passed directly to Twitter as the search parameter.

- Expiry Date: The date on which to stop harvesting this stream.

Note that in version 0.3.1, more metadata fields have been added, most notably a 'project' field, which the default tweetstream browse views use.

Once these fields have been completed, click 'Save and Return'.

Harvesting

The tweepository package harvests each stream periodically (with the example crontab above, update_tweetstreams.pl runs every 30 minutes). No harvesting is done on creation, so a new tweetstream will initially be empty. Tweets will be processed to:

- extract hashtags

- extract mentioned users

These data will be summarised in the tweetstream objects.
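To make the idea of the summary concrete, here is an illustrative shell sketch that counts hashtags or mentioned users in a file of tweet texts (one tweet per line). It is not Tweepository's own parsing code; `count_entities` is hypothetical, and the `[A-Za-z0-9_]` character class is a simplifying assumption about what a hashtag or username may contain:

```shell
#!/bin/sh
# Illustrative sketch only, not the plugin's parser.
# Count hashtags ('#') or @-mentions ('@') in tweet text read from stdin.
# Output: "entity count" per line, most frequent first.
# Usage: count_entities '#' < tweets.txt
#        count_entities '@' < tweets.txt
count_entities() {
    sigil=$1
    # C locale keeps sort/uniq ordering byte-wise and reproducible
    LC_ALL=C grep -oE "${sigil}[A-Za-z0-9_]+" |
        LC_ALL=C sort | uniq -c |
        LC_ALL=C sort -k1,1nr -k2,2 |
        awk '{ print $2, $1 }'
}
```

For example, `printf '%s\n' 'Loving #EPrints with @alice' | count_entities '@'` prints `@alice 1`.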

URLs

Note that URLs are no longer followed to expand shortened links. This is a target for future development.

Viewing a Tweetstream

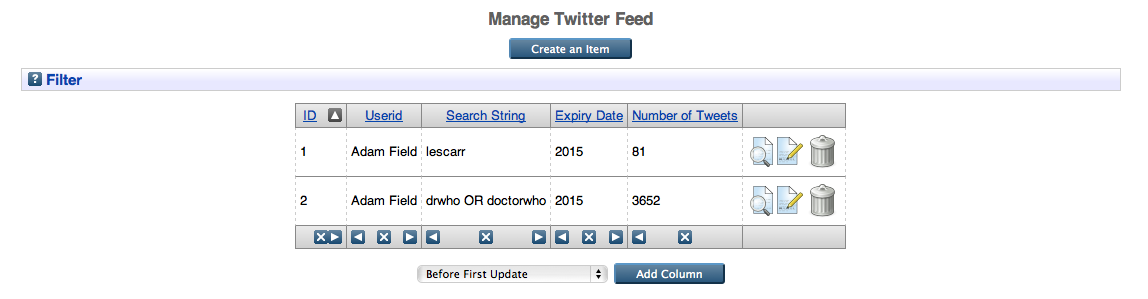

To view a tweetstream, click on 'Manage Records', then 'Twitter Feed':

The above screen shows a list of all twitter streams that the logged in user has access to. Clicking on the view icon (the magnifying glass) will bring up the view screen, which shows all metadata set on this twitter feed. At the top of the page will be a link to the tweetstream's page. It will be of the form:

http://repository.foo.com/id/tweetstream/5

Below is an example of a tweetstream page:

Exporting

Due to the architecture of the Twitter feeds (see below), exporting using the standard EPrints exporters (e.g. XML) will only work if both the tweet dataset and the tweetstream dataset are exported. For this reason, export plugins have been provided for tweetstreams. Currently, a tweetstream can be exported as:

- CSV

- HTML

- JSON

Note that EPrints may struggle to export very large tweetstreams through the web interface. If there are more than several hundred thousand tweets, it may be advisable to export from the command line.

Architecture

Both tweetstreams and tweets are EPrints Data Objects. Each tweet object stores the ID of all tweetstreams to which it belongs. This allows tweets to appear in more than one stream, but only be stored once in the database.

Permissions

The z_tweepository_cfg.pl file contains the following:

$c->{roles}->{"tweetstream-admin"} = [

"datasets",

"tweetstream/view",

"tweetstream/details",

"tweetstream/edit",

"tweetstream/create",

"tweetstream/destroy",

"tweetstream/export",

];

$c->{roles}->{"tweetstream-editor"} = [

"datasets",

"tweetstream/view",

"tweetstream/details:owner",

"tweetstream/edit:owner",

"tweetstream/create",

"tweetstream/destroy:owner",

"tweetstream/export",

];

$c->{roles}->{"tweetstream-viewer"} = [

"tweetstream/view",

"tweetstream/export",

];

push @{$c->{user_roles}->{admin}}, 'tweetstream-admin';

push @{$c->{user_roles}->{editor}}, 'tweetstream-editor';

push @{$c->{user_roles}->{user}}, 'tweetstream-viewer';

This defines three roles. The admin role:

- Can create tweetstreams

- Can destroy tweetstreams

- Can see tweetstream details

- Can see the list of tweetstreams in 'Manage Records'

- Can view tweetstream abstract pages

- Can export tweetstreams

The editor role:

- Can create tweetstreams

- Can destroy tweetstreams that they created

- Can see details of tweetstreams that they created

- Can see the list of tweetstreams in 'Manage Records'

- Can view tweetstream abstract pages

- Can export tweetstreams

The viewer role:

- Can view tweetstream abstract pages (but must know the URL)

- Can export tweetstreams

These three roles have been assigned to repository administrators, editors and users respectively. This can be changed by modifying this part of the config.

Exporting to a Web Observatory

Tweet data can be exported nightly to a Web Observatory. This is accomplished by inserting tweet records into an external MongoDB database that has been set up by the administrators of the Web Observatory for this purpose.

Configuring a Web Observatory

An example Web Observatory configuration can be found in the z_tweepository_cfg.pl file. Copy it to a new .pl file and create an entry for each Web Observatory database that is to be used:

$c->{web_observatories} =

{

'webobservatory1' =>

{

type => 'mongodb',

server => 'foo.bar.uk',

port => 1234,

db_name => 'foo',

username => 'username',

password => 'password',

authorised_users =>

[

'username1',

'username2'

]

}

};

To test, use the web_observatory_test.pl script:

perl -I/<eprints_root>/perl_lib /<eprints_root>/archives/<repositoryid>/bin/tweepository/web_observatory_test.pl <repositoryid> <webobservatoryid>
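If the test script cannot connect, it can help to rule out basic network problems first. The sketch below is an addition, not part of Tweepository: it only checks whether a TCP connection to the configured server and port can be opened, using bash's `/dev/tcp` redirection and the coreutils `timeout` command.

```shell
#!/bin/bash
# Rough pre-flight check (not part of Tweepository): can this host open a
# TCP connection to HOST:PORT within 5 seconds? Says nothing about
# MongoDB credentials or database names.
# Usage: port_reachable HOST PORT
port_reachable() {
    host=$1
    port=$2
    # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection
    timeout 5 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

With the example configuration above, `port_reachable foo.bar.uk 1234 && echo reachable` would report whether the server and port settings are reachable from the repository server.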

authorised_users

A tweetstream is allowed to be pushed to a Web Observatory only if the person who created the tweetstream is in the list of authorised users.
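The rule amounts to a simple membership test. The shell sketch below only illustrates it and is not Tweepository's code; `is_authorised` is hypothetical, and the exact, case-sensitive comparison is an assumption of this sketch:

```shell
#!/bin/sh
# Illustration of the authorised_users rule (not Tweepository's code).
# Succeeds if CREATOR appears in the list of authorised users.
# Usage: is_authorised CREATOR AUTHORISED_USER...
is_authorised() {
    creator=$1
    shift
    for u in "$@"; do
        # exact, case-sensitive match (an assumption of this sketch)
        [ "$u" = "$creator" ] && return 0
    done
    return 1
}
```

With the example configuration, `is_authorised username1 username1 username2` succeeds, while a tweetstream created by any other user would not be pushed.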

Configuring a TweetStream

When editing or creating a tweetstream, fill in the Web Observatory Export part of the workflow:

- Export To Web Observatory -- set to 'Yes'

- The ID of the web observatory -- In the above example, it would be 'webobservatory1'

- Web Observatory Collection -- the MongoDB collection that this will be inserted into. Note that:

  - More than one tweetstream can be inserted into a collection

  - The URL of the tweepository will be prepended onto the collection ID. If 'foo' is the collection name, then on the Web Observatory, the collection will be (e.g.) 'http://www.mytweepository.com/foo'
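The naming rule for collections can be sketched as follows. `wo_collection_name` is a hypothetical helper, and dropping a trailing slash from the repository URL is an assumption made here to avoid a doubled '/':

```shell
#!/bin/sh
# Sketch of the collection naming rule: repository URL + "/" + collection ID.
# Usage: wo_collection_name REPOSITORY_URL COLLECTION_ID
wo_collection_name() {
    base=${1%/}   # drop one trailing slash (assumption, avoids "//")
    echo "$base/$2"
}
```

`wo_collection_name http://www.mytweepository.com foo` prints `http://www.mytweepository.com/foo`, matching the example above.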

Nightly Export

The web_observatory_push.pl script needs to be added to the crontab to run nightly, after the update_tweetstream_abstracts.pl script. It is blocked by update_tweetstream_abstracts.pl, so it will only start doing actual work once that script has terminated.
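A possible cron entry is sketched below. It assumes, without confirmation from this page, that web_observatory_push.pl sits alongside web_observatory_test.pl in bin/tweepository/ and takes the repository ID as its only argument; check both against your installation. `web_observatory_push_cron` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: print a nightly cron entry for web_observatory_push.pl.
# ASSUMPTIONS: script path bin/tweepository/ and a single REPOSITORYID argument.
# Usage: web_observatory_push_cron EPRINTS_ROOT REPOSITORYID
web_observatory_push_cron() {
    root=$1
    repo=$2
    # 00:30 is shortly after update_tweetstream_abstracts.pl starts (00:15 in the
    # example crontab); the push script waits for that script to finish anyway.
    echo "30 0 * * * perl -I$root/perl_lib $root/archives/$repo/bin/tweepository/web_observatory_push.pl $repo"
}
```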

Troubleshooting

If this error is observed when harvesting:

DBD::mysql::st execute failed: Duplicate entry '30370867-0' for key 'PRIMARY' at /opt/eprints3/perl_lib/EPrints/Database.pm line 1249.

...particularly after database corruption has been fixed, you'll need to clean up the database with some direct MySQL queries. First, make a note of the duplicate entry ID. In the above case, it's 30370867 (ignore the '-0' part). The problem is that there are some dirty records which need to be removed. Subtract a hundred or so from the duplicate entry ID (e.g. down to a nice round 30370750); we're going to remove any data with this ID or higher.
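The arithmetic in that step can be scripted. In this sketch, rounding down to a multiple of 50 is just one way of getting a "nice round" number (it reproduces the 30370750 of the example); `cleanup_cutoff` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: choose the cutoff ID for the clean-up.
# Takes the duplicate entry from the error (e.g. 30370867-0), drops the
# "-0" part, subtracts 100 and rounds down to a multiple of 50.
# Usage: cleanup_cutoff DUPLICATE_ENTRY
cleanup_cutoff() {
    dup=${1%-*}
    case $dup in
        ''|*[!0-9]*) echo "not a numeric ID: $1" >&2; return 1 ;;
    esac
    echo $(( (dup - 100) / 50 * 50 ))
}
```

`cleanup_cutoff 30370867-0` prints `30370750`.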

First, from the tweet table:

mysql> delete from tweet where tweetid >= 30370750;

Then delete from all the 'tweet_foo' tables (the multiple-value tables, which is where the problem actually is).

mysql> show tables like "%tweet\_%";

This will show something like this:

+----------------------------------+
| Tables_in_tweets (tweet\_%)      |
+----------------------------------+
| tweet__index                     |
| tweet__index_grep                |
| tweet__ordervalues_en            |
| tweet__rindex                    |
| tweet_has_next_in_tweetstreams   |
| tweet_hashtags                   |
| tweet_target_urls                |
| tweet_tweetees                   |
| tweet_tweetstreams               |
| tweet_url_redirects_redirects_to |
| tweet_url_redirects_url          |
| tweet_urls_from_text             |
| tweet_urls_in_text               |
+----------------------------------+
13 rows in set (0.00 sec)

Ignore the tables that have '__' in them (tweet__index, tweet__index_grep, tweet__ordervalues_en, tweet__rindex), but repeat the delete command for all other tables:

mysql> delete from tweet_has_next_in_tweetstreams where tweetid >= 30370750;

mysql> delete from tweet_hashtags where tweetid >= 30370750;

mysql> delete from tweet_target_urls where tweetid >= 30370750;

mysql> delete from tweet_tweetees where tweetid >= 30370750;

mysql> delete from tweet_tweetstreams where tweetid >= 30370750;

mysql> delete from tweet_url_redirects_redirects_to where tweetid >= 30370750;

mysql> delete from tweet_url_redirects_url where tweetid >= 30370750;

mysql> delete from tweet_urls_from_text where tweetid >= 30370750;

mysql> delete from tweet_urls_in_text where tweetid >= 30370750;
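Rather than typing each statement, the deletes can be generated from the table list. This is a sketch; `tweet_cleanup_sql` is a hypothetical helper that emits the delete for the tweet table first, then one for every table name on stdin that starts with 'tweet_' and does not contain '__':

```shell
#!/bin/sh
# Hypothetical helper: print the clean-up DELETE statements.
# Reads table names (one per line) on stdin; skips the '__' index tables.
# Usage: tweet_cleanup_sql CUTOFF_ID < table_names
tweet_cleanup_sql() {
    cutoff=$1
    # Only plain integers, so nothing unexpected ends up in the SQL
    case $cutoff in
        ''|*[!0-9]*) echo "cutoff must be a non-negative integer" >&2; return 1 ;;
    esac
    echo "delete from tweet where tweetid >= $cutoff;"
    awk -v c="$cutoff" '/^tweet_/ && !/__/ { printf "delete from %s where tweetid >= %s;\n", $0, c }'
}
```

For example, `mysql -N -B -e 'show tables like "%tweet\_%"' DBNAME | tweet_cleanup_sql 30370750 > cleanup.sql` writes the statements to a file; review it (and take a backup) before running `mysql DBNAME < cleanup.sql`.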